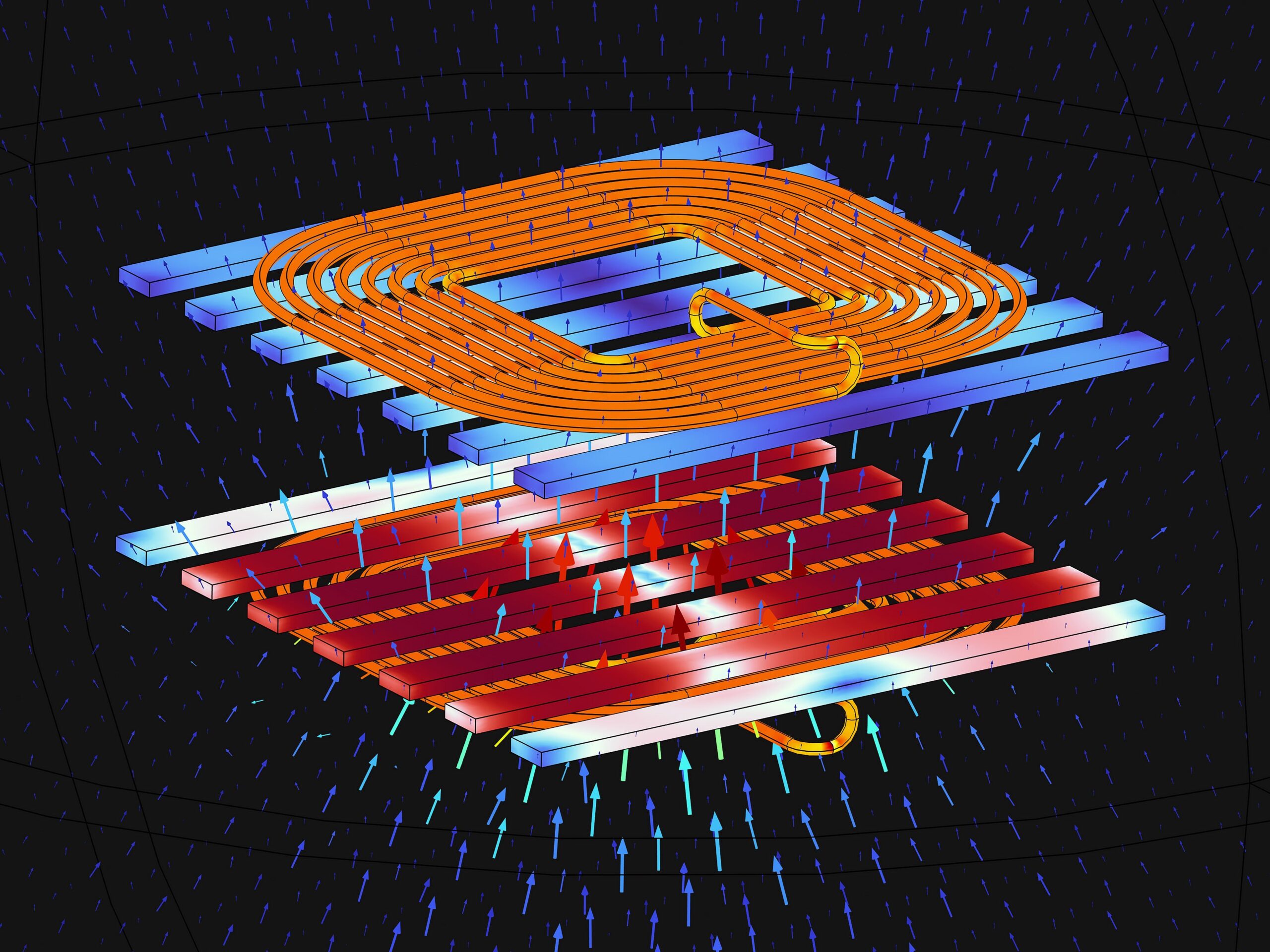

The Basque city of San Sebastián is beginning to play a leading role in the radically different field of quantum technology. The official launch of IBM Quantum System Two, the most advanced quantum computer of the ‘Blue Giant,’ took place in Donostia-San Sebastián. This infrastructure, which the technology company has installed on the main campus of the Ikerbasque scientific foundation in Gipuzkoa, aims to solve, in combination with classical computing, problems that have so far remained unsolvable.

The installation in San Sebastián is the first of its kind in Europe and the second worldwide, after Kobe, Japan. It stems from a 2023 strategic agreement between IBM and the Basque Government that brought the technology company’s advanced quantum machine to Spain. As Mikel Díez, director of Quantum Computing at IBM Spain, explains to Computerworld, the system hybridizes quantum and classical computing to leverage the strengths of both. “At IBM, we don’t see quantum computing working alone, but rather alongside classical computing so that each does what it does best,” he says.

Is IBM Quantum System Two, launched today in San Sebastián, fully operational?

Yes, with today’s inauguration, IBM Quantum System Two is now operational. This quantum computer architecture is the latest we have at IBM and the most powerful in terms of technological performance. From now on, we will be deploying all the projects and initiatives we are pursuing with the ecosystem on this computer, as IBM’s participation in this program is not exclusively infrastructure-based, but also involves promoting joint collaboration, research, training, and other programs.

There are academic experts who argue that there are no 100% quantum computers yet, and there’s a lot of marketing from technology companies. Is this new quantum computer real?

Back in 2019, we launched the first quantum computer available and accessible on our cloud. More than 30,000 people connected that day; since then, we’ve built more than 60 quantum computers, and as we’ve evolved them, we currently have approximately 10 operating remotely from our cloud in both the United States and Europe. We provide access, from both locations, to more than 500,000 developers. Furthermore, we’ve executed more than 3 trillion quantum circuits. This quantum computer, the most advanced to date, is a reality, it’s tangible, and it allows us to explore problems that until now couldn’t be solved. However, classical infrastructure is also needed to solve these problems. We don’t envision quantum computing going it alone, but rather working alongside classical computing so that each does what it does best. What do quantum computers do best? Exploring information maps that involve extremely demanding, exponentially growing amounts of data.

So IBM’s proposal, in the end, is a hybrid of classical computing with quantum computing.

Correct. But, I repeat, quantum computers exist, we have them physically. In fact, the one we’re inaugurating today is the first of its kind in Europe, the second in the world.

This hybrid proposal isn’t a whim; it’s done by design. For example, when we need to simulate how certain materials behave in order to get the best characteristics from them, the process is designed with an eye to what we want to simulate on classical computers and what we want to simulate on quantum computers, so that the sum of the two is greater than two. Another example is artificial intelligence, for which we must identify patterns within a vast sea of data. This must be done on the classical side but also, where the classical side doesn’t reach, on the quantum side, so that the results of the latter feed into the entire artificial intelligence process. That’s the hybridization we’re seeking. In any case, I insist, in our IBM Quantum Network we have more than 300 organizations around the world (private companies, public agencies, startups, technology centers, and universities) running real quantum circuits.

And, one clarification. Back in 2019, when we launched our first quantum computer, with between 5 and 7 qubits, what we could attempt to do with that capacity could be perfectly simulated on an ordinary laptop. After the advances of these years, simulating problems that require more than 60 or 70 qubits with classical technology is not possible even on the largest classical computer in the world. That’s why what we do on our current computers, with 156 qubits, is run real quantum circuits. They’re not simulated: they run real circuits to help with artificial intelligence problems, optimization, simulation of materials, emergence of models, all that kind of thing.
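
The scaling behind that remark can be illustrated with some rough arithmetic. The sketch below is not IBM's method or any official benchmark; it assumes a brute-force simulation that stores every one of the 2^n complex amplitudes of an n-qubit state at 16 bytes each. The function names are hypothetical, and smarter classical techniques can handle some larger circuits, so the figures are only a rough guide to why the approach stops scaling.

```python
# Illustrative arithmetic only: memory needed to hold a full n-qubit state vector.
# Assumption (not from the interview): brute-force simulation that stores all
# 2**n complex amplitudes at 16 bytes each (two 64-bit floats).

BYTES_PER_AMPLITUDE = 16


def statevector_bytes(num_qubits: int) -> int:
    """Return the bytes needed to store every amplitude of an n-qubit state."""
    if num_qubits < 0:
        raise ValueError("num_qubits must be non-negative")
    return (2 ** num_qubits) * BYTES_PER_AMPLITUDE


def human_readable(num_bytes: int) -> str:
    """Format a byte count using binary prefixes (KiB, MiB, ...)."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB", "ZiB", "YiB"]
    value = float(num_bytes)
    for unit in units:
        # Stop at the first unit that fits, or at the largest unit available.
        if value < 1024 or unit == units[-1]:
            return f"{value:.1f} {unit}"
        value /= 1024


if __name__ == "__main__":
    # Qubit counts mentioned in the interview, plus 30 as a laptop-scale reference.
    for n in (7, 30, 60, 70, 156):
        print(f"{n:>3} qubits: {human_readable(statevector_bytes(n))}")
```

Under these assumptions, each additional qubit doubles the memory required, which is why a handful of qubits fits on a laptop while 60 or 70 do not fit anywhere.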

The Basque Government’s BasQ program includes three types of initiatives or projects. The first are related to the evolution of quantum technology itself: how to continue improving error correction, how to identify components of quantum computers, and how to optimize both these and the performance of these devices. From a more scientific perspective, we are working on how to represent the behavior of materials so that we can improve the resistance of polymers, for example. This is useful in aeronautics to improve aircraft suspension. We are also working on time crystals, which, from a scientific perspective, seek to improve precision, sensor control, and metrology. Finally, a third line relates to the application of this technology in industry; for example, we are exploring how to improve the investment portfolio for the banking sector, how to optimize the energy grid, and how to explore logistics problems.

What were the major challenges in launching the machine you’re inaugurating today? Why did you choose the Basque Country to implement your second Quantum System Two?

Before implementing a facility of this type in a geographic area, we assess whether it makes sense based on four main pillars: whether the area has technological capacity and expertise, talent and workforce, a research and science ecosystem, and, finally, an industrial fabric. Let me remind you that IBM currently has more than 40 quantum innovation centers around the world, and this is one of them, with the difference that this is the first in Europe to have a machine.

When evaluating the Basque Country option, we saw that the Basque Government already had supercomputing facilities, giving them technological experience in managing these types of facilities from a scientific perspective. They also had a scientific policy in place for decades, which, incidentally, had defined quantum physics as one of its major lines of work. They had long-standing talent creation, attraction, and retention policies with universities. And, finally, they had an industrial network with significant expertise in digitalization technologies, artificial intelligence, and industrial processes that require technology. In other words, the Basque Country option met all the requirements.

You said the San Sebastián facility is the same as the one you’ve implemented in Japan. So what does IBM have in Germany?

What we have in Germany is a quantum data center, similar to our cloud data centers, but focused on serving organizations that don’t have a dedicated computer on-site for their ecosystem. But in San Sebastián, as in Kobe (Japan), there’s an IBM System Two machine with a modular architecture and a 156-qubit Heron processor.

Just as we have a quantum data center in Europe to provide remote service, we have another one in the United States, where we also have our quantum laboratory, which is where we are building, in addition to the current system (System Two), the one that we will have ready in 2029, which will be fault-tolerant.

Look, these computers are computers, and they’re real. The nuance may come from their capabilities, and it’s true that the one we’re inaugurating today in San Sebastián is a noisy computer, and this, in some ways, still limits certain features.

IBM’s roadmap for quantum computing is as follows. First, by 2026, just around the corner, we hope to discover the quantum advantage, which will come from using existing real physical quantum computers alongside classical computers for specific processes. That is, we’re not just focused on whether it’s useful to have a quantum computer to run quantum circuits. Rather, as I mentioned before, by applying the capabilities of real quantum computers, alongside classical ones, we’ll gain an advantage in simulating new materials, simulating research into potential new drugs, optimizing processes for the energy grid, or for financial investment portfolios.

The second major milestone will come in 2029, when we expect to have a fault-tolerant machine with 200 logical qubits commercially available. The third milestone is planned for 2033, when we will have a fault-tolerant machine with 2,000 logical qubits. That’s 10 times more logical qubits, meaning we’ll be able to perform processing at scale without the capacity limitations that exist now with qubits that lack fault tolerance.

You mentioned earlier that current quantum computers, including the one in San Sebastián, are noisy. How does this impact the projects you intend to support?

What we, and indeed the entire industry, are looking for when we talk about the capacity of a quantum computer is processing speed, processing volume, and accuracy rate. The latter is related to errors. The computer we inaugurated here has a rate that is on the threshold of one error for every thousand operations we perform with a qubit. Although it’s a very, very small rate, we are aware that it can lead to situations where the result is not entirely guaranteed. What are we doing at this current time? Post-processing the results we obtain and correcting possible errors. Obviously, this is a transitional stage; what we want is for these errors to no longer exist by 2029, and for the results to no longer need to be post-processed to eliminate them. We want error correction to be automatic, as is the case with the computers we use today in our daily lives.

But even today, with machines with these flaws, we are seeing successes: HSBC, using our quantum computing, has achieved a 34% gain in estimating the probability of automated government bond trades being closed.
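
To give a feel for why an error rate of roughly one in a thousand operations still matters, here is a minimal sketch. It rests on a deliberately simplified assumption that is not from the interview: every operation fails independently with the same probability. Real devices have correlated and gate-dependent errors, and the post-processing described above is not modeled here. The function name is hypothetical.

```python
# Simplified model (an assumption for illustration): each operation fails
# independently with the same probability, so the chance that a whole circuit
# runs without any error is (1 - p) ** N.


def error_free_probability(num_operations: int, error_rate: float = 1e-3) -> float:
    """Probability that num_operations independent operations all succeed."""
    if num_operations < 0:
        raise ValueError("num_operations must be non-negative")
    if not 0.0 <= error_rate <= 1.0:
        raise ValueError("error_rate must be between 0 and 1")
    return (1.0 - error_rate) ** num_operations


if __name__ == "__main__":
    # Circuit sizes chosen arbitrarily to show the trend.
    for n_ops in (10, 100, 1_000, 5_000):
        p_ok = error_free_probability(n_ops)
        print(f"{n_ops:>5} operations: {p_ok:.1%} chance of an error-free run")
```

In this toy model the chance of a clean run shrinks geometrically with circuit size, which is consistent with the interview's point that results currently need post-processing and that fault tolerance is the longer-term goal.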

So your idea is to improve quantum computing along the way, right?

Exactly. It’s the same as the project to go to the Moon. Although the goal was precisely that, to go to the Moon, other milestones were achieved along the way. The same thing happens with quantum computing; the point is that you have to have a very clear roadmap, and IBM has one, at least until 2033.

How do you view the progress of competitors like Google, Microsoft, and Fujitsu?

In quantum computing, there are several types of qubits—the elements that store the information we want to use for processing—and we’re pursuing the option we believe to be the most robust: superconducting technology. Ours are superconducting qubits, and we believe this is a good choice because it’s the most widely recognized option in the industry.

In quantum computing, it’s not all about the hardware. What matters is both the qubits and what we call the stack: all the levels that must be traversed to reach a quantum computer. The important thing is to look at where you stand at each of those levels. There are competitors who work more on one part than another, but, once again, what industry and science value is a supplier that has the complete stack, because that’s what allows them to conduct experiments and advance applications.

A question I’ve been asked recently is whether we’ll have quantum computers at home.

So, will we have them?

This is similar to what happens with electricity. Our homes receive 220 volts through the low-voltage meter; we don’t need high voltage or large transformers, which are found in large centers on the outskirts of cities. It’s the same in our field: we’ll have data centers with classical and quantum supercomputers, but we’ll see the value in our homes when new materials, improved artificial intelligence models, or even better drugs are discovered.

Speaking of electricity, Iberdrola is one of the companies using the new quantum computer.

There are various industrial players in the Basque Country’s quantum ecosystem, in addition, of course, to the scientific hub. Iberdrola recently joined the Basque Government’s BasQ program to utilize the capabilities of our computer and the entire ecosystem to improve its business processes and optimize the energy grid. They are interested in optimizing the predictive maintenance of all their assets, including home meters, wind turbines, and the various components in the energy supply chain.

What other large companies will use the new computer?

In the Basque ecosystem, we currently have more than 30 companies participating, along with technology and research centers. These are projects that have not yet been publicly announced because they are still under development, although I can mention some entities such as Tecnalia, Ikerlan, the universities of Deusto and Mondragón, and startups such as Multiverse and Quantum Match.

How many people are behind the San Sebastián quantum computer project?

There will be about 400 researchers in the building where the computer is located, although these projects involve many more people.

Spain has a national quantum strategy in collaboration with the autonomous communities. Is it possible that IBM will bring another quantum machine to another part of the country?

In principle, the one we have on our roadmap is the computer implemented for the Basque Government. In Andalusia, we have inaugurated a quantum innovation center, but it’s a project that doesn’t have our quantum computer behind it. That is, people in Andalusia will be able to access our European quantum data center [the one in Germany]. In any case, the Basque and Andalusian governments are in contact so that, should they need it, Andalusia can access the quantum computer in San Sebastián.

What advantages does being able to access a quantum machine in one’s own country bring?

When we talked earlier about how we want to hybridize quantum computing with classical computing, well, this requires having the two types of computers adjacent to each other, something that happens in the San Sebastián center, because the quantum computer is on one floor and the classical computer will be on the other floor. Furthermore, there are some processes that, from a performance and speed perspective, require the two machines to be very close together.

And, of course, if you own the machine, you control access: who enters, when, and at what speed. Obviously, in the case of quantum data centers like the one in Germany, you have to go through a queue, and there may be more congestion if people from many European countries enter at the same time.

On the other hand, and this is relevant, at IBM we believe that having our quantum machines in a third-party facility raises the quality standards compared to having them only in our data centers or laboratories controlled by us.

Beyond this, having a quantum machine in the country will generate an entire ecosystem around this technology and will be a focal point for attracting talent not only in San Sebastián but throughout Spain.

Will there be another computer of this type in Europe soon?

We don’t have a forecast for it at the moment, but who could have imagined four years ago that there would be a computer like this in Spain, and in the Basque Country in particular?

We’ve talked a lot, but is there anything you’d like to highlight?

As part of the Basque Government’s quantum program, we’ve trained more than 150 people from different companies, technology centers, startups, and more over the past two years. We’ve also created an ambassador program to help identify specific applications. We seek to reach across the entire ecosystem so that there are people with sufficient knowledge to understand where value is generated by using quantum technology.

This interview originally appeared on Computerworld Spain.