🛸 The Complete Guide to Unidentified Aerial Phenomena

Reports of UAP sightings are on the rise worldwide. Whether you're a curious observer or a serious researcher, this guide covers everything from recognizing genuine anomalies to documenting phenomena properly for scientific review.

Section 1: Historical Context & Current Landscape

UAP History

Unidentified aerial phenomena are not new. Military pilots, civilians, and scientific observers have documented anomalies for decades.

Key Historical Events:

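- Roswell Incident (1947) – Initial “flying disc” press release retracted within a day in favor of a weather balloon explanation; in the 1990s the Air Force attributed the debris to the classified Project Mogul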

- CIA U-2 Program (1950s) – High-altitude reconnaissance flights were misidentified by pilots and ground observers, fueling UFO reports

- Project Blue Book (1952-1969) – U.S. Air Force investigation, 12,618 reports, 701 unidentified

- U.S. Navy Encounters (2004-2015) – Pilot encounters, including the 2004 “Tic Tac” incident near the USS Nimitz, captured on videos the Pentagon officially released in 2020

- 2021 ODNI Preliminary Assessment – First unclassified UAP assessment delivered to Congress by the U.S. intelligence community

Current Scientific Acceptance

Recent government disclosures have made UAP research more acceptable in academic circles. Some universities, think tanks, and military institutions now study these phenomena formally.

The shift from “UFO conspiracy” to “serious scientific inquiry” changed everything for legitimate researchers.

🔒 Protect Your Research

Secure UAP databases and sensitive research findings with enterprise-grade encryption protocols.

→ Research Security with Surfshark

FTC Disclosure: This post contains affiliate links. We may earn a commission if you purchase through these links at no additional cost to you. See our Affiliate Disclosure for details.

Section 2: What Makes an Observation Credible

Eliminating Conventional Explanations

Most UAP reports resolve into conventional explanations: aircraft, satellites, weather balloons, drones, or atmospheric phenomena.

Common Misidentifications:

- Aircraft: Helicopters with spotlights, military jets, commercial aircraft at unusual angles

- Satellites: ISS passes, Starlink trains, other sunlit satellites visible near dawn/dusk

- Atmospheric: Ball lightning, plasma phenomena, rare atmospheric optical effects

- Drones: Commercial drones, modified aircraft, experimental government systems

- Hoaxes and mistakes: Deliberately false reports, toys or balloons mistaken for craft

Criteria for Genuinely Anomalous Observations

Genuine anomalies typically display:

- Consistent geometry and controlled movement patterns

- Apparent defiance of known physics (extreme acceleration, hypersonic speed, instantaneous changes of direction, hovering without visible propulsion)

- Multiple independent observer confirmation

- Technical instrument corroboration (radar, infrared, electromagnetic detection)

- Absence of plausible conventional explanation after rigorous analysis

Section 3: Observation Best Practices

Equipment Setup

Optical Systems:

- High-quality binoculars (10×50 or 15×70; magnification × objective diameter in mm)

- Computerized telescopes with GPS tracking and data logging

- 4K video cameras capable of 200x zoom

- Infrared cameras that detect heat signatures invisible to the naked eye

Detection Systems:

- EMF (electromagnetic field) meters

- Radio frequency spectrum analyzers

- Meteorological stations for environmental context

Observation Protocols

Before Observation:

- Check astronomical forecasts for satellite passes and celestial events

- Monitor aircraft tracking systems for scheduled flights

- Review weather patterns for atmospheric phenomena probability

- Test all equipment for functionality

During Observation:

- Maintain objective viewpoint; avoid preconceived conclusions

- Document precise timing using GPS-synced instruments

- Record detailed descriptions of object appearance, motion, color

- Note environmental conditions (temperature, humidity, wind, visibility)

- Use multiple instruments for corroboration

🖥️ Host Your Research

Professional hosting infrastructure supports comprehensive UAP databases and collaborative research platforms.

→ Enterprise Hosting with Contabo

Section 4: Documentation Standards

Comprehensive Reporting Framework

Essential Information for Every Report:

- Date & Time: Precise timestamp (GPS-verified if possible)

- Location: GPS coordinates, altitude, terrain description

- Duration: Total observation time, any gaps

- Visual Description: Shape, size, color, luminosity, surface features

- Motion: Speed estimates, directional changes, hovering capability

- Sound: Audible phenomena or unusual silence

- Environmental Context: Weather, lighting conditions, visibility

- Corroboration: Independent witnesses, instrumental confirmation
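The checklist above can be captured as a structured record so that every report carries the same fields. The following is a minimal sketch, assuming Python 3.7+ and only the standard library; the class name `SightingReport`, its field names, and the checks in `problems()` are illustrative choices, not an established reporting standard.

```python
from dataclasses import dataclass, field, asdict
from datetime import datetime, timezone
import json


@dataclass
class SightingReport:
    """One observation record. Field names are illustrative, not a standard."""
    timestamp_utc: datetime       # Date & Time
    latitude_deg: float           # Location
    longitude_deg: float
    altitude_m: float
    terrain: str
    duration_s: float             # Duration: total observation time
    gaps_s: float                 # Duration: time the object was out of view
    visual_description: str       # Shape, size, color, luminosity, surface
    motion: str                   # Speed estimates, direction changes, hovering
    sound: str                    # Audible phenomena or unusual silence
    environment: str              # Weather, lighting, visibility
    witnesses: list = field(default_factory=list)    # Corroboration
    instruments: list = field(default_factory=list)

    def problems(self) -> list:
        """Return human-readable problems; an empty list means none found."""
        issues = []
        if self.timestamp_utc.tzinfo is None:
            issues.append("timestamp has no timezone")
        if not -90.0 <= self.latitude_deg <= 90.0:
            issues.append("latitude out of range")
        if not -180.0 <= self.longitude_deg <= 180.0:
            issues.append("longitude out of range")
        if self.duration_s <= 0:
            issues.append("duration must be positive")
        if self.gaps_s < 0 or self.gaps_s > self.duration_s:
            issues.append("gaps must be between 0 and duration")
        if not self.witnesses and not self.instruments:
            issues.append("no corroboration recorded")
        return issues

    def to_json(self) -> str:
        """Serialize the record, writing the timestamp in ISO 8601 form."""
        record = asdict(self)
        record["timestamp_utc"] = self.timestamp_utc.isoformat()
        return json.dumps(record, indent=2)


# Placeholder values for illustration only; not a real sighting.
example = SightingReport(
    timestamp_utc=datetime(2024, 1, 1, 3, 15, tzinfo=timezone.utc),
    latitude_deg=35.0, longitude_deg=-106.0, altitude_m=1600.0,
    terrain="open desert",
    duration_s=90.0, gaps_s=10.0,
    visual_description="white oval, steady glow",
    motion="hovered, then moved east",
    sound="none heard",
    environment="clear sky, no moon",
    witnesses=["second observer at same site"],
    instruments=["tripod-mounted camera"],
)
print(example.problems())  # an empty list means no problems were found
```

Storing the timestamp in UTC with an explicit timezone avoids ambiguity when reports from different regions are compared later.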

Video & Photographic Standards

Recording Best Practices:

- High-resolution, low-light capable cameras

- Stable platform (tripod, gimbal) to reduce motion artifacts

- Audio recording for environmental context

- Metadata preservation (EXIF data contains timestamp, location, camera specs)

- Multiple angles and zoom levels for analysis

Section 5: Scientific Analysis Methods

Image & Video Analysis

Forensic Techniques:

- Authentication analysis detecting digital manipulation

- Spectral analysis identifying light signatures

- Motion vector analysis measuring acceleration patterns

- Size estimation using reference objects or parallax

- Thermal signature analysis from infrared recordings
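Two of the techniques above, size estimation and acceleration measurement, reduce to short calculations. The sketch below assumes simple geometry (an object viewed roughly face-on, a parallax baseline perpendicular to the line of sight) and a one-dimensional track of positions over time; the function names are hypothetical. Any size estimate is only as reliable as the distance that goes into it, and distance is often the least certain quantity in a sighting.

```python
import math


def physical_size_m(angular_size_deg: float, distance_m: float) -> float:
    """Linear size of an object that spans angular_size_deg at distance_m."""
    return 2.0 * distance_m * math.tan(math.radians(angular_size_deg) / 2.0)


def parallax_distance_m(baseline_m: float, parallax_deg: float) -> float:
    """Distance to an object whose direction differs by parallax_deg between
    two viewpoints baseline_m apart (baseline perpendicular to line of sight)."""
    return baseline_m / (2.0 * math.tan(math.radians(parallax_deg) / 2.0))


def accelerations(times_s, positions_m):
    """Estimate acceleration along a 1-D track by finite differences.

    Velocities are computed between consecutive samples and assigned to the
    midpoint of each interval; accelerations are differences of those
    velocities. Works for unevenly spaced samples.
    """
    mids, velocities = [], []
    for i in range(len(times_s) - 1):
        dt = times_s[i + 1] - times_s[i]
        velocities.append((positions_m[i + 1] - positions_m[i]) / dt)
        mids.append((times_s[i] + times_s[i + 1]) / 2.0)
    return [
        (velocities[i + 1] - velocities[i]) / (mids[i + 1] - mids[i])
        for i in range(len(velocities) - 1)
    ]


# A track following x = 2 * t**2 has constant acceleration 4 m/s^2.
print(accelerations([0, 1, 2, 3], [0, 2, 8, 18]))  # [4.0, 4.0]
```

Finite differences amplify measurement noise, so acceleration figures derived from a few video frames should be reported with wide error bars.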

Statistical Analysis

Rigorous Methods:

- Clustering analysis identifying temporal and geographic patterns

- Statistical testing for significant correlations

- Confidence intervals on measured quantities (speed, size, distance) rather than single point estimates

- Elimination of false patterns through random distribution testing
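Random distribution testing can be sketched as a Monte Carlo comparison: measure how tightly the reported locations are packed, then check how often purely random points are packed at least as tightly. The example below is one possible approach, assuming a flat rectangular study area and a uniform null model; the function names are hypothetical. In practice sighting reports follow population density, so a uniform null model will overstate clustering and a population-weighted one would be more appropriate.

```python
import math
import random


def mean_nearest_neighbor(points):
    """Average distance from each point to its closest other point."""
    total = 0.0
    for i, (xi, yi) in enumerate(points):
        total += min(
            math.hypot(xi - xj, yi - yj)
            for j, (xj, yj) in enumerate(points)
            if j != i
        )
    return total / len(points)


def clustering_p_value(points, bounds, trials=999, seed=0):
    """Monte Carlo p-value for spatial clustering.

    Returns the fraction of uniformly random point sets (same count, same
    bounding box) whose mean nearest-neighbor distance is at most the
    observed one. Small values suggest the clustering is unlikely by chance.
    """
    (xmin, xmax), (ymin, ymax) = bounds
    rng = random.Random(seed)  # fixed seed keeps the result reproducible
    observed = mean_nearest_neighbor(points)
    as_extreme = 0
    for _ in range(trials):
        simulated = [
            (rng.uniform(xmin, xmax), rng.uniform(ymin, ymax)) for _ in points
        ]
        if mean_nearest_neighbor(simulated) <= observed:
            as_extreme += 1
    # Count the observed data as one of the trials so p is never exactly 0.
    return (as_extreme + 1) / (trials + 1)


# Made-up coordinates (km) packed into one corner of a 100 x 100 km area.
tight = [(50 + 0.1 * i, 50 + 0.1 * (i % 3)) for i in range(10)]
print(clustering_p_value(tight, ((0, 100), (0, 100))))
```

A low p-value only says the pattern is unlikely under the chosen null model; it does not say what caused it.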

Section 6: Classification Systems

NARCAP Categorization

The National Aviation Reporting Center on Anomalous Phenomena (NARCAP) provides standardized classification:

- Type A: Distinct shapes; well-corroborated data

- Type B: Point-source objects; credible reports

- Type C: Ambiguous geometry; some corroboration

- Type D: Limited information; single witness reports

Phenomenon Characteristics

Categorizing Observed Behavior:

- Electromagnetic Effects: Vehicle interference, power disruptions

- Luminosity: Steady, pulsating, changing color

- Motion Patterns: Hovering, instant acceleration, gravity-defying maneuvers

- Size Estimates: Relative to known reference objects

🧹 Maintain Your Observatory

Automated systems keep your research facility organized so you stay focused on the skies.

→ Facility Management with Ecovacs

Section 7: Common Pitfalls

Cognitive Biases in Research

- Confirmation Bias: Seeking information confirming preconceived beliefs

- Apophenia (false pattern recognition): Seeing meaningful patterns in random data

- Sensory Misinterpretation: Perspective and size misjudgment at distance

- Expectation Bias: What you expect to see influences what you perceive

Methodological Errors

- Inadequate baseline elimination of conventional explanations

- Single-witness observations without corroboration

- Insufficient instrumental verification

- Premature conclusion before thorough analysis

- Insufficient documentation for peer review

Section 8: Contributing to Scientific Understanding

Research Network Integration

Serious researchers contribute to institutional databases and academic analysis efforts.

Credible Organizations:

- NARCAP (National Aviation Reporting Center on Anomalous Phenomena)

- MUFON (Mutual UFO Network)

- Academic groups (researchers at Harvard and Stanford have published on the topic)

- Government research programs (the Pentagon's UAP Task Force, succeeded in 2022 by the All-domain Anomaly Resolution Office, AARO)

Publication & Peer Review

Academic publication legitimizes research. Submit findings to peer-reviewed journals, even though rejection is common.

Science advances through rigorous challenge. Expect skepticism; respond with better data.

Section 9: Advanced Techniques

Instrumentation Arrays

Networked observation stations provide multi-station confirmation and triangulation capabilities.

Coordinated networks across regions increase detection probability and analytical confidence.
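Triangulation between two stations can be sketched as the intersection of two sight lines. The example below assumes each station knows its position in a shared local grid (east and north, in meters), measures azimuth in degrees clockwise from north, and is close enough to the other that Earth's curvature can be ignored; the function names are hypothetical.

```python
import math


def triangulate(station_a, azimuth_a_deg, station_b, azimuth_b_deg):
    """Intersect two horizontal sight lines.

    Stations are (east_m, north_m) tuples. Returns the (east_m, north_m)
    position where the sight lines cross, or None if the lines are nearly
    parallel or cross behind either station.
    """
    # Unit direction vectors: azimuth 0 points north, 90 points east.
    dax = math.sin(math.radians(azimuth_a_deg))
    day = math.cos(math.radians(azimuth_a_deg))
    dbx = math.sin(math.radians(azimuth_b_deg))
    dby = math.cos(math.radians(azimuth_b_deg))

    cross = dax * dby - day * dbx
    if abs(cross) < 1e-9:
        return None  # sight lines are parallel; no usable fix

    dx = station_b[0] - station_a[0]
    dy = station_b[1] - station_a[1]
    range_a = (dx * dby - dy * dbx) / cross  # distance along A's sight line
    range_b = (dx * day - dy * dax) / cross  # distance along B's sight line
    if range_a < 0 or range_b < 0:
        return None  # intersection lies behind a station

    return (station_a[0] + range_a * dax, station_a[1] + range_a * day)


def height_m(horizontal_range_m: float, elevation_deg: float) -> float:
    """Height above the station from horizontal range and elevation angle."""
    return horizontal_range_m * math.tan(math.radians(elevation_deg))


# Station A looks north-east, station B (1 km east of A) looks north-west.
print(triangulate((0.0, 0.0), 45.0, (1000.0, 0.0), 315.0))
```

Accuracy degrades quickly when the two sight lines are nearly parallel, so stations are best spaced so that lines to the target cross at a wide angle.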

Predictive Modeling

Historical patterns may predict hotspots and optimal observation times.

Machine learning can help surface subtle patterns that are hard to spot through manual analysis.

Final Thoughts

UAP research has transitioned from fringe topic to legitimate scientific inquiry. The questions “Are we alone?” and “Is advanced technology visiting Earth?” demand rigorous investigation.

Whether you're a casual observer or a dedicated researcher, approach the subject with scientific rigor, intellectual humility, and genuine curiosity.

Keep watching the skies. The universe is far stranger than we imagine. 🌌

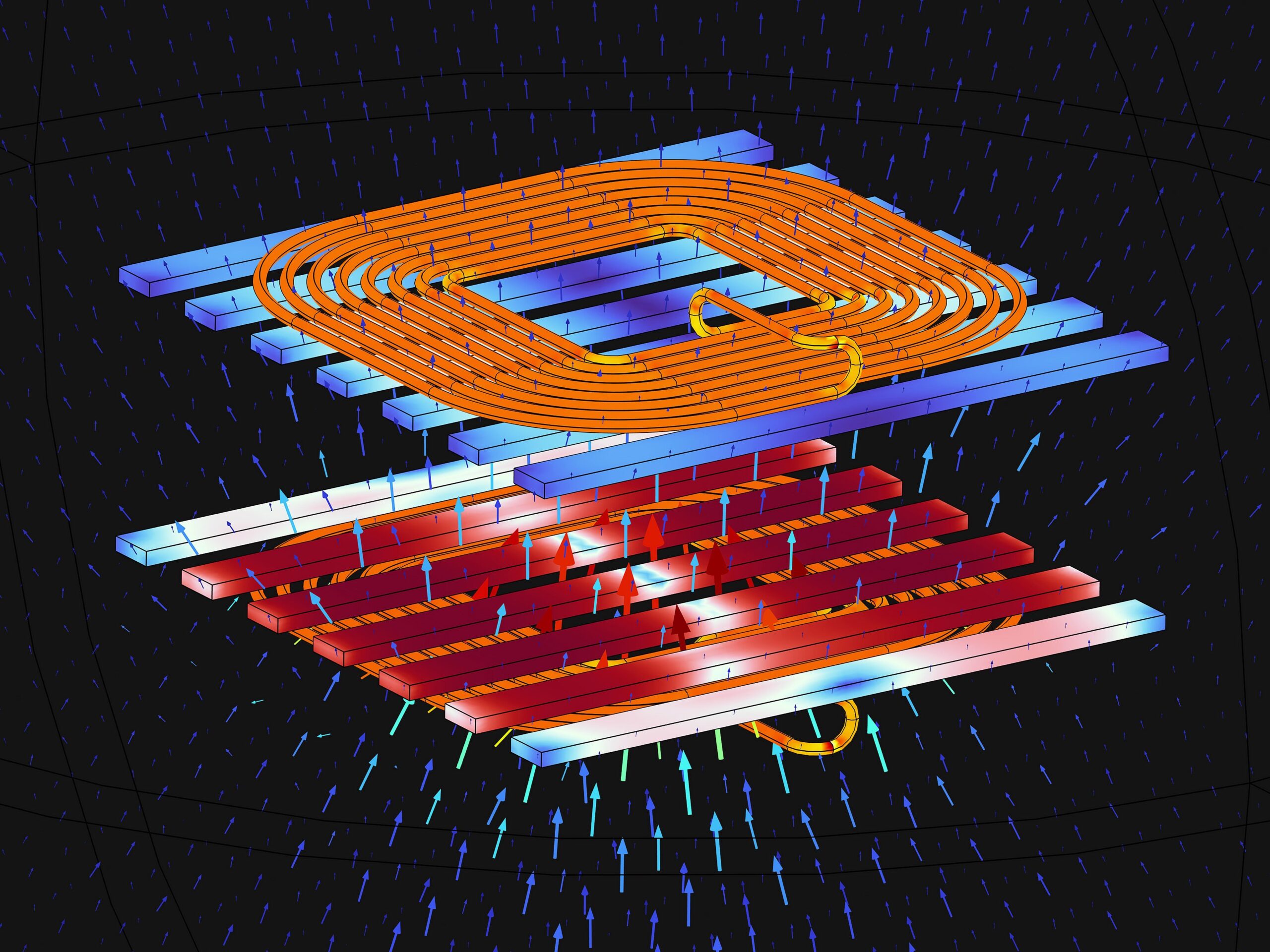

To cool computer chips with lasers, the team at Maxwell Labs plans to place a grid of photonic cold plates on top of the chip substrate. In their demo setup, a thermal camera detects hot spots coming from the chip. A laser then shines onto the photonic cold plate next to the hot spot, stimulating the photonic process that results in cooling. The photonic cold plate [inset] consists of a coupler that guides light in and out of the plate, the extractor where anti-Stokes fluorescence occurs, the back reflector that prevents light from entering the computer chip, and a sensor that is designed to detect hot spots.GygInfographics.com

To cool computer chips with lasers, the team at Maxwell Labs plans to place a grid of photonic cold plates on top of the chip substrate. In their demo setup, a thermal camera detects hot spots coming from the chip. A laser then shines onto the photonic cold plate next to the hot spot, stimulating the photonic process that results in cooling. The photonic cold plate [inset] consists of a coupler that guides light in and out of the plate, the extractor where anti-Stokes fluorescence occurs, the back reflector that prevents light from entering the computer chip, and a sensor that is designed to detect hot spots.GygInfographics.com The demo setup at Maxwell Labs demonstrates how current computer chips can be cooled with lasers. A photonic cold plate is placed on top of the chip. A thermal camera images the hot spots coming from the chip, and a laser is directed at the photonic cold plate directly above the hot spot.Maxwell Labs

The demo setup at Maxwell Labs demonstrates how current computer chips can be cooled with lasers. A photonic cold plate is placed on top of the chip. A thermal camera images the hot spots coming from the chip, and a laser is directed at the photonic cold plate directly above the hot spot.Maxwell Labs