Wireless connectivity is standard across corporate campuses, in warehouses and factories, and even in remote locations. But what about wireless power? Can we get rid of all cables?

Many of us are already using wireless chargers for our cellphones and other devices. But induction chargers aren’t a complete solution since they require very close proximity between the device and the charging station. So, what can enterprises try? Some organizations are deploying midrange solutions that use radio signals to transmit power through the air, and others are even experimenting with laser power transmission.

Here are three emerging options for wireless power in the enterprise, and top use cases to consider.

Induction charging

Induction charging is about more than saving users the two seconds it would take them to plug a cord into their device. It can also be used to power vehicles, such as factory vehicles, or even cars and buses. Also known as near-field charging, it’s the single largest sector of the global wireless power market, according to Coherent Market Insights, accounting for 89% of 2025’s $16.6 billion wireless power market. Of that, consumer electronics accounted for 74%.
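To put those percentages in dollar terms, here is a rough back-of-the-envelope sketch. It assumes that "of that" means consumer electronics is 74% of the near-field segment rather than of the whole market; the function and variable names are illustrative only.

```python
# Figures quoted above from Coherent Market Insights.
TOTAL_MARKET_USD_BN = 16.6   # 2025 global wireless power market
NEAR_FIELD_SHARE = 0.89      # near-field (induction) share of the total
CONSUMER_SHARE = 0.74        # ASSUMPTION: share of the near-field segment


def segment_sizes(total_bn, near_field_share, consumer_share):
    """Split the market into segments, in billions of dollars."""
    near_field = total_bn * near_field_share
    consumer = near_field * consumer_share
    return {
        "near_field": near_field,
        "consumer_electronics": consumer,
        "other_near_field": near_field - consumer,
        "everything_else": total_bn - near_field,
    }


sizes = segment_sizes(TOTAL_MARKET_USD_BN, NEAR_FIELD_SHARE, CONSUMER_SHARE)
for name, value in sizes.items():
    print(f"{name}: ${value:.1f}B")
```

Under that reading, near-field charging is worth roughly $14.8 billion, of which about $10.9 billion is consumer electronics, leaving just under $4 billion for vehicles, industrial equipment, and other near-field uses.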

Detroit launched its first electric roadway in 2023, allowing vehicles to charge their batteries wirelessly when they park on, or drive over, that particular section of the road. It requires special equipment to be installed in the vehicle, and it can be pricey for individual cars, but it can be a useful option for buses or delivery vans. The city plans to add a second segment next year, reports the American Society of Civil Engineers.

The first commercial user will be UPS, which will also add stationary wireless charging at its Detroit facility. “This innovative approach will revolutionize how we power our electric vehicles and drive fleet electrification forward,” said Dakota Semler, CEO and co-founder of electric vehicle manufacturer Xos, in a press release.

Florida plans to open an electrified road in 2027, and, in California, UCLA is testing an in-road inductive charging system for campus buses that is planned to be in operation by 2028. The goal is to have the project ready in time for the 2028 Olympic Games in Los Angeles.

Utah plans to add in-motion charging lanes to streets over the next ten years, with the first one scheduled to be installed later this year, as part of its electrified transportation action plan. A major impetus is the 2034 Winter Olympics, which will be held in Salt Lake City.

Early adopters in Utah include Utah PaperBox and Boise Cascade’s Salt Lake distribution hub. There’s also an electrified roadway, currently in the pilot and development phase, at the Utah Inland Port, which will provide in-motion charging for freight vehicles. Construction has already begun at this facility on the world’s first one-megawatt wireless charging station, which will provide 30-minute fast charging to parked electric semi trucks.

Europe is even further ahead. Sweden began working on the first electric road in 2018. In 2021, the one-mile stretch of electrified road was able to charge two commercial vehicles simultaneously, even though they had different battery systems and power requirements. In 2022, an electric bus began operating regularly on the road, charging while driving over it.

The idea is that wireless in-motion charging will allow commercial vehicles to spend more time on the road and less time parked at charging stations, with less time wasted driving to and from them. It also allows vehicles to have smaller batteries and longer ranges. If the technology goes mainstream on public roads, drivers would be able to pay for the electricity they get in a way similar to how the E-ZPass system works. But a more immediate application of the technology is the one UPS is deploying: charging vehicles at a corporate facility.

There are several vendors that offer this technology:

- HEVO offers wireless charging pads for garages and parking lots for both residential and commercial markets.

- Plugless Power is another company offering wireless charging for parked vehicles, and it claims to have provided 1 million charge hours to its customers, which include Google and Hertz. It provided the first wireless charging stations for Tesla Model S cars, and its wireless charging system for driverless shuttle buses was the first of its kind in Europe.

- WAVE offers a wireless charging system for electric buses, and its Salt Lake City depot can charge multiple vehicles automatically using inductive power. In addition to buses, other use cases include ports, such as the Port of Los Angeles, and warehouse and distribution facilities. In warehouses, it can provide power to electric yard trucks, forklifts, and other equipment.

- InductEV offers high-power, high-speed wireless charging for commercial vehicles such as city buses, auto fleets and industrial vehicles, with on-route wireless charging solutions deployed in North America and Europe. It was named one of Time magazine’s best inventions of 2024. Seattle’s Sound Transit plans to have nearly half of its electric buses charged by on-route wireless chargers from InductEV, and municipal bus charging is already operational in Indianapolis, Martha’s Vineyard, and Oregon. The AP Moeller Maersk Terminal in Port Elizabeth, NJ is also using the company’s wireless chargers for its electric port tractors.

Other companies offering wireless charging for industrial vehicles such as automated guided vehicles and material handling robots are Daihen, WiTricity, and ENRX.

Meanwhile, cellphone charging pad-style wireless chargers also have plenty of business applications other than ease of use. Mojo Mobility, for example, offers charging systems designed to work in sterile medical environments.

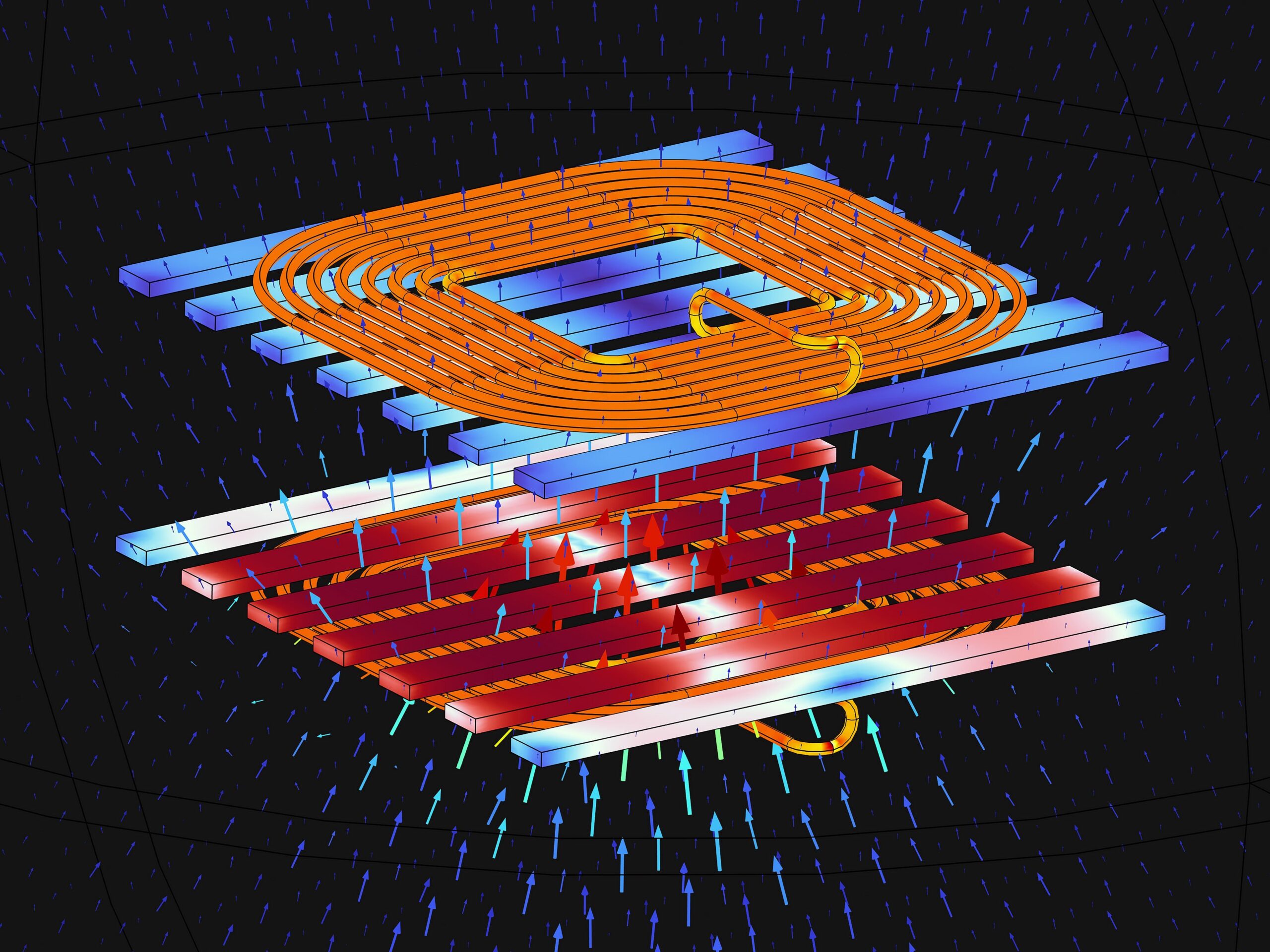

Ambient IoT and medium-range charging

The most common type of ambient IoT is powered by solar cells, so no power transmission is required at all. For example, ambient IoT is already reshaping agriculture, with solar-powered sensors placed in fields, greenhouses, and livestock areas, according to a September report from Omdia. Small devices can also be powered by motion or body heat.

Transmitted wireless power, however, is more predictable and reliable and can work in a wider variety of environments — as long as the device is within range of the power transmitter or has a battery backup for when it’s not. Medium-range charging can work at a distance of a few inches to several yards or more. The less power the device requires, and the bigger its antenna, the longer the distance it can support.
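The relationship between required power, antenna size, and range can be illustrated with the textbook Friis free-space equation, which gives received power as P_r = P_t · G_t · G_r · (λ / 4πd)². This is a minimal sketch under idealized far-field, line-of-sight assumptions, not a description of any vendor's system. The transmitter power, frequency, gains, and device power figures below are hypothetical, and the model ignores rectifier losses, obstructions, and regulatory limits.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def received_power_w(tx_power_w, tx_gain, rx_gain, freq_hz, distance_m):
    """Friis free-space estimate of the power reaching the receiver."""
    wavelength_m = SPEED_OF_LIGHT_M_S / freq_hz
    path_factor = (wavelength_m / (4 * math.pi * distance_m)) ** 2
    return tx_power_w * tx_gain * rx_gain * path_factor


def max_range_m(tx_power_w, tx_gain, rx_gain, freq_hz, required_power_w):
    """Distance at which received power drops to what the device needs."""
    wavelength_m = SPEED_OF_LIGHT_M_S / freq_hz
    power_ratio = tx_power_w * tx_gain * rx_gain / required_power_w
    return wavelength_m / (4 * math.pi) * math.sqrt(power_ratio)


# Hypothetical setup: 1 W transmitter at 915 MHz, linear antenna gains.
base = max_range_m(1.0, 4.0, 1.5, 915e6, required_power_w=100e-6)
frugal = max_range_m(1.0, 4.0, 1.5, 915e6, required_power_w=10e-6)
big_antenna = max_range_m(1.0, 4.0, 6.0, 915e6, required_power_w=100e-6)

print(f"baseline range:             {base:.2f} m")
print(f"10x lower power needed:     {frugal:.2f} m")
print(f"4x higher receiver gain:    {big_antenna:.2f} m")
```

In this idealized model, range scales with the square root of the power ratio: a device that needs a tenth of the power can sit about 3.2 times farther away, and quadrupling the receiving antenna's gain doubles the range.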

“It’s really pushing IoT to the next level,” says Omdia analyst Shobhit Srivastava.

One popular use case is for sensors that are placed in locations where it’s not convenient to change batteries, he says, such as logistics. For example, Wiliot’s IoT Pixel is a postage stamp-sized sticker powered by radio waves that works at a range of up to 30 feet. Sold in reels, the price is as low as 10 cents a sensor when bought in bulk. The sensors can monitor temperature, location, and humidity and communicate this information to a company network via Bluetooth.

Sensors such as these can be attached to pallets to track their location, says Srivastava. “People in Europe are very conscious about where their food is coming from and, to comply with regulations, companies need to have sensors on the pallets,” he says. “Or they might need to know that meat has been transported at proper temperatures.” The smart tags can just be slapped on pallets, he adds. “This is a very cheap way to do this, even with millions of pallets moving around.”

The challenge, Srivastava says, is that when the devices are moving from trucks to logistics hubs, to warehouses, and to retail stores, “they need to connect to different technologies.”

Plus, all this data needs to be collected and analyzed. Some sensor manufacturers also offer cloud-based platforms to do this — and charge extra for the additional services.

One wireless power company, Energous, is doing just that, with an end-to-end ambient IoT platform consisting of wirelessly powered sensors, RF-based energy transmitters, and cloud-based monitoring software. Its newest product, the e-Sense Tag, was announced in June. The company has sold over 15,000 transmitters, says Giampaolo Marino, senior vice president of strategy and business development, and counts two Fortune 10 companies (one in retail IoT and one in logistics and transportation) among its customers.

The new tags will cost around $5 each, though the price is subject to change as the product is commercialized, Marino says. That’s pricier than disposable tags, which cost under $1 each. But the new tags will last for years, he adds, and can be reprogrammed.
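The trade-off between a reusable tag and disposable ones comes down to how many times the reusable tag gets redeployed. The sketch below is a simplified break-even calculation using only the tag prices quoted in this article; it ignores transmitters, software fees, and labor, and the function name is illustrative.

```python
def breakeven_uses(reusable_cents, disposable_cents):
    """Smallest number of uses at which one reusable tag costs no more
    than buying a fresh disposable tag for every use."""
    # Integer ceiling division avoids floating-point rounding on prices.
    return -(-reusable_cents // disposable_cents)


# $5 reusable tag vs. a disposable tag at the $1 upper bound.
print(breakeven_uses(500, 100))  # 5

# $5 reusable tag vs. a 10-cent disposable sticker bought in bulk.
print(breakeven_uses(500, 10))   # 50
```

Since disposable tags cost less than $1, five reuses is a lower bound; against the cheapest bulk stickers, a $5 tag would need to be reused about 50 times to pay for itself on tag cost alone.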

“Three years ago, it was science fiction,” Marino says. “Today, it’s something we’re deploying.” It’s similar to how we went from cable internet to Wi-Fi everywhere, he says.

One use case that we’re not seeing yet for this kind of medium-range power transmission is factory robots. “We are far away from that,” says Omdia’s Srivastava. “The use cases are for low-power devices only.”

Similarly, smartphones are energy-hungry devices with their big displays and other components that draw power, he says. “So, smartphones won’t be ambient powered in any near future,” he says. “But small wearables, like wristbands in a hospital, can be ambient powered.”

Like a warehouse, a hospital is a controlled physical location where power stations can be installed to provide power to the IoT devices, enabling a wide variety of applications, such as monitoring heart rates, respiration, and other key health metrics.

Who’s in charge of wireless power networks?

Is wireless power transmission a networking task that falls within the purview of the IT department, or is it handled on the operational or business unit level? According to Srivastava, that depends on the scale of the deployment. “If it’s a smaller deployment, with one or two locations to track, it might just stay with, say, the logistics team,” he says.

But for larger deployments, with thousands of devices, ambient IoT is about more than just the power — there’s also the data transmission. “Then the network and security teams should be involved,” he says.

Other issues that might come up beyond data security include electromagnetic interference and regulatory compliance for RF exposure.

According to Omdia’s Srivastava and Energous, some of the notable vendors in the space are: Everactive (wireless ambient IoT devices); Wiliot (battery-free IoT pixel tags); HaiLa Technologies (low power wireless semiconductor); ONiO (self-powered, batteryless solutions); atma.io from Avery Dennison (connected product cloud); EnOcean SmartStudio (sensor and data management); SODAQ (low power hardware platforms); Lightricity (integration of energy-harvesting solutions into IoT systems); SML Group (retail RFID solution integrators); Sequans (integration of cellular IoT connectivity into ambient IoT systems); Powercast (both inductive and radio power transmission); Ossia (RF power using the FCC-approved Cota wireless power standard); and Minew (Bluetooth bridge and gateway to support Wiliot IoT Pixels).

Laser charging

For longer distances, lasers are the way to go.

Lasers can be used to power drones and other aerial craft or collect power from remote wind turbines. They can also be used to send power to cell towers in areas where power cables are impractical to deploy.

In May, DARPA achieved a new wireless power transmission record, recording more than 800 watts of power at a distance of over five miles. This technology can even collect power from space-based solar collectors and beam it down to Earth. In fact, it’s a bit easier to beam power up and down since there’s less atmosphere to get in the way. Caltech’s Space Solar Power Project demonstrated this in 2023.

In space, there are no day-night cycles, no seasons, and no cloud cover, meaning that solar panels can yield eight times more power than they can down on Earth. The idea is to collect power, transform it into electricity, convert it to microwaves, and transmit it down to where it’s needed, including locations that have no access to reliable power.

In April, startup Aetherflux announced a $50 million funding round and plans to have its first low-Earth orbit test in 2026. China is currently working on a “Three Gorges dam in space” project, which will use super heavy rockets to create a giant solar power station in space, according to the South China Morning Post.

The European Space Agency is expected to make a decision at the end of this year on proceeding with its own space-based solar power project, called SOLARIS.

The same technology can also be used to transmit power from one satellite to another, and we’re already seeing a race to build a power grid in outer space.

Star Catcher Industries plans to build a space-based network of solar power collectors that will concentrate solar energy and then transmit it to other satellites, meaning that companies will be able to send up more powerful satellites without expanding their physical footprint. On-the-ground testing was conducted earlier this year, and the first in-orbit test will take place in 2026.

“Demand is growing exponentially for small satellites that can do more, from onboard processing to extended-duration operations,” said Chris Biddy, CEO at satellite manufacturer Astro Digital, which became Star Catcher’s first customer in September.
